The Australian Computer Society (ACS), of which I am a recent member, has published its Digital Pulse 2024, written by Deloitte, and… I’m not sure what they were thinking.

Well, the part on diversity is important. Let’s make that clear to begin with. There are significant imbalances in the tech industry regarding gender, First Nations representation, culture, age, disability, neurodiversity and geographic location. And we need to work on all these things, because participation in tech needs to be representative of the people whose lives it affects, which is essentially everyone.

That’s the highlight of the report. And then there’s the bit on Generative AI, of which, you may recall, I’m not a massive fan.

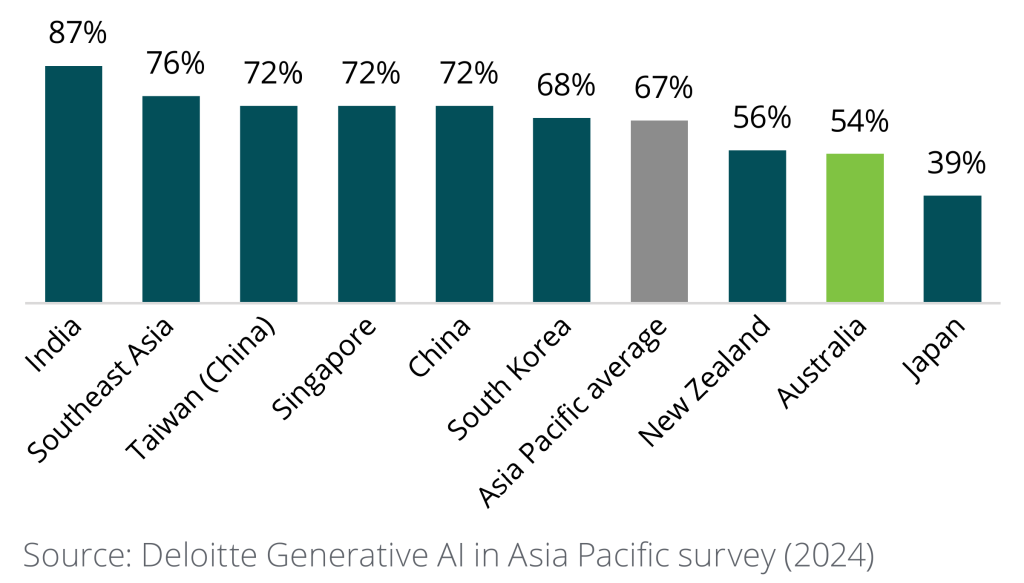

The report proclaims that Australia has a “generative AI gap” compared to other Asia-Pacific nations. Specifically, we have less “AI talent”, we use less generative AI, we invest less in generative AI, and we don’t think our workplaces are set up for it. The report warns that “Additional action to encourage greater development of workforce skills related to AI will be required for Australia to close the generative AI gap.”

Here’s a graph from page 35 of the report:

My problem, in a nutshell, is that I don’t see the problem. This is good news! Well, selfishly parochial good news, and purely in a relative sense. Australia is wasting less time, energy, and reputation on what is essentially a technological cult.

This section of the report really is outrageously credulous. I don’t take issue with the figures cited (obtained through various surveys), but the interpretation of the data is brazenly beholden to motivated reasoning.

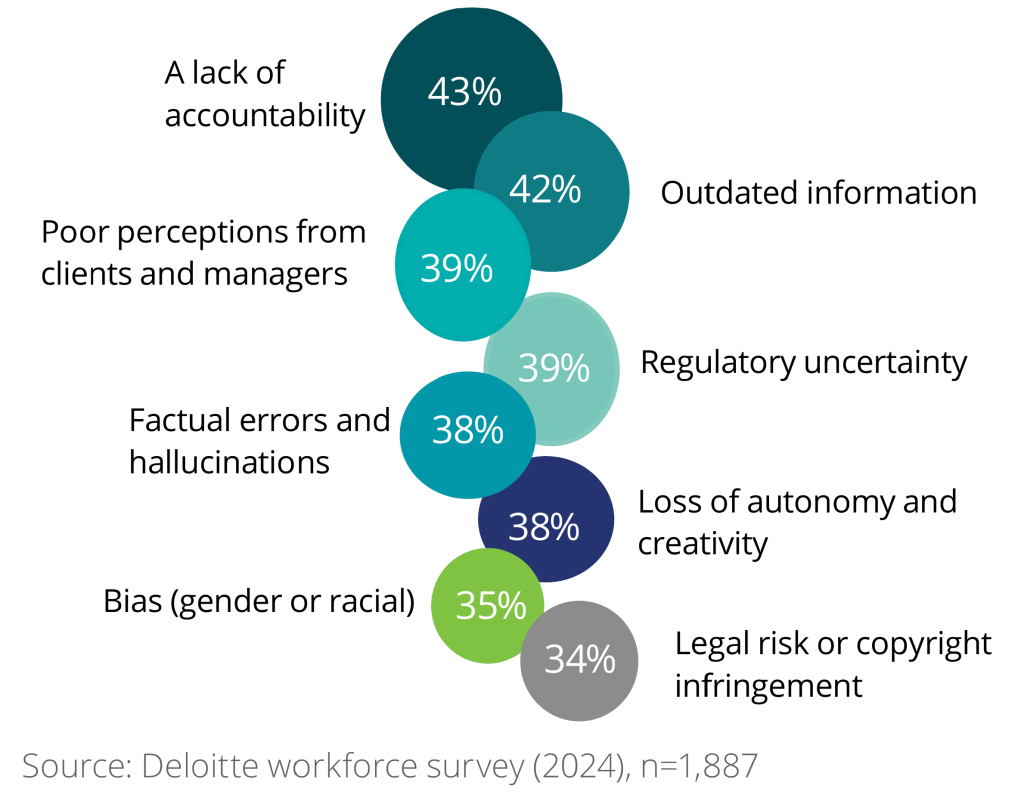

There is no reflection in the report on whether generative AI is, on balance, a force for good or not. Nor are there any citations to that effect. The report simply presumes it to be, even while presenting a chart-oid on all the things wrong with it (page 38):

A lot of these issues are absolutely fundamental to the technology, or to human interaction with the technology. Yet, the report stares them down and just burbles on about using “AI tools responsibly”, establishing “systems to manage AI risks” and “pragmatic approaches”.

Did I just have a stroke?

The report identifies, right there, a range of reasons not to use generative AI, and its conclusion is “hold my beer”? Can you imagine if we talked about gun control this way in Australia? Obviously we know that assault weapons dramatically increase the risk of mass shootings, so our challenge, given that we largely don’t have assault weapons, is to introduce them while “managing the risk”. Right. Yes. Thanks for your time.

My take on this chart is to question why those numbers are so low, especially for “factual errors and hallucinations” and “bias”. These problems are uncontroverted fact, not just opinion, and if only 35% to 38% of respondents have encountered them, that’s the gap we need to close. We need to educate everyone about how bad the technology is.

I also fail to see where the report addresses “A lack of accountability”, other than off-handedly mentioning it in the chart, as if it’s just something some weirdos inexplicably kept bringing up in the survey. Just a mystery of the universe, eh?

We could really use some accountability in the tech industry, of all places. There isn’t much of it to begin with, and given the enormous potential for generative AI to screw things up, it’s only going to become more important. If we’re not serious about accountability, then we’re not serious about ethics, and then what the hell are we doing?

So, ACS/Deloitte, I’m not super-confident you really have your finger on the pulse here. You didn’t use ChatGPT, did you?